Using Local Simple Runtime

Local Simple Runtime is a Docker-based local LOC environment. It offers the following benefits:

- Requires neither a login nor any cloud resources

- Supports session storage, local storage and event store

- Supports all agents

- Lets you read log messages and use local database and file services (except SMB)

Developers can build and test a data process in Local Simple Runtime before deploying it to production.

Note that the latest local runtime version is v0.5.1, whereas the latest LOC version is v0.6.2. Agents from v0.6.2 are still compatible with v0.5.1.

The local runtime only executes data processes; it cannot manage any other assets.

Environment

You need to install Docker Desktop. See Setup CLI.

Create and Setup Local Profile

Create a profile /profiles/local.yaml at the root of your CLI workspace:

simpleRuntime:
  image: public.ecr.aws/m0s8j1u6/saffron/simple-runtime:release-0.5.1
  dockerNetwork: host
  eventstoreEndpoint: http://127.0.0.1:8087
  etcdEndpoints: http://127.0.0.1:2379

And set it as your default profile:

./loc profile set -f ./profiles/local.yaml -p local

./loc profile use local

Install and Start Local Service

Pull Data Process Image

If you are using this version of local simple runtime for the first time, open a terminal and pull the data process image:

docker pull public.ecr.aws/m0s8j1u6/saffron/simple-runtime:release-0.5.1

This is the actual local runtime itself, but it needs a few supporting services to work.

Create Docker Compose File

Create a new file /local-runtime/docker-compose.yaml in the CLI workspace with the following content:

version: "3.9"

networks:
  saffron:
    driver: bridge

volumes:
  es01:
    driver: local

services:
  saffron-evenstore:
    restart: "always"
    image: public.ecr.aws/m0s8j1u6/saffron/eventstore:release-0.5.1
    container_name: saffron-evenstore
    ports:
      - "8200:8200"
      - "8087:8087"
      - "8007:8007"
    environment:
      - SAFFRON_EVENTSTORE_API_ASSRESS=0.0.0.0
      - SAFFRON_EVENTSTORE_API_PORT=8007
      - SAFFRON_EVENTSTORE_API_AUTHORIZATION_SECRET=-----BEGIN PUBLIC KEY-----MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAmuzhqHVUKj0CVtnqCS1PbVOyq1TcyykKgXoBwQvPaF8N8zIHXUjCgwjmKbOK+SjLoLC7cgLWlwbV2u12J/9w2sTawbROOno7Td2OZte2qNLugsEf5RxGVKd4IZx5zshxTI/bwnYGvpyVWdbRGvtWMKpbkzkR7O8UumtUMdUl6h1iUKRXkAxoB2ge/CZUDCss643iS0KPO7gIowvcYFCKBX86G/+GBlIHcXWTIa2eEWuTx5T64lmSVJrlN1L6diZRlUIBq6JNuQv26ZJgNAO91DDcCLCxcQgLH5KqwzdXwz2jBOqL7ac1HAW/mov4oJrk7ytWVumoFJ+NlJlSZ+vd5wIDAQAB-----END PUBLIC KEY-----
      - SAFFRON_EVENTSTORE_GRPC_ADDRESS=0.0.0.0
      - SAFFRON_EVENTSTORE_GRPC_PORT=8087
      - SAFFRON_EVENTSTORE_METRICS_ADDRESS=0.0.0.0
      - SAFFRON_EVENTSTORE_METRICS_PORT=8200
      - SAFFRON_EVENTSTORE_ELASTICSEARCH_ENDPOINT=http://es01:9200
      - SAFFRON_EVENTSTORE_ELASTICSEARCH_USERNAME=
      - SAFFRON_EVENTSTORE_ELASTICSEARCH_PASSWORD=
    command:
      - run
    networks:
      - saffron
    depends_on:
      - etcd01
      - es01

  es01:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.16.1
    restart: "always"
    container_name: es01
    environment:
      - xpack.security.enabled=false
      - bootstrap.memory_lock=true
      - discovery.type=single-node
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - es01:/usr/share/elasticsearch/data
    ports:
      - "9200:9200"
    networks:
      - saffron

  kibana:
    image: kibana:7.16.1
    restart: "always"
    container_name: kibana
    volumes:
      - ./kibana.yaml:/usr/share/kibana/config/kibana.yml
    ports:
      - "5601:5601"
    networks:
      - saffron

  etcd01:
    image: docker.io/bitnami/etcd:3.5.1-debian-10-r31
    restart: "always"
    container_name: etcd01
    environment:
      - ALLOW_NONE_AUTHENTICATION=yes
      - ETCD_NAME=etcd01
      - ETCD_INITIAL_ADVERTISE_PEER_URLS=http://etcd01:2380
      - ETCD_LISTEN_PEER_URLS=http://0.0.0.0:2380
      - ETCD_LISTEN_CLIENT_URLS=http://0.0.0.0:2379
      - ETCD_ADVERTISE_CLIENT_URLS=http://etcd01:2379
      - ETCD_INITIAL_CLUSTER_TOKEN=saffron-etcd-cluster
      - ETCD_INITIAL_CLUSTER_STATE=new
    ports:
      - "2379:2379"
    networks:
      - saffron

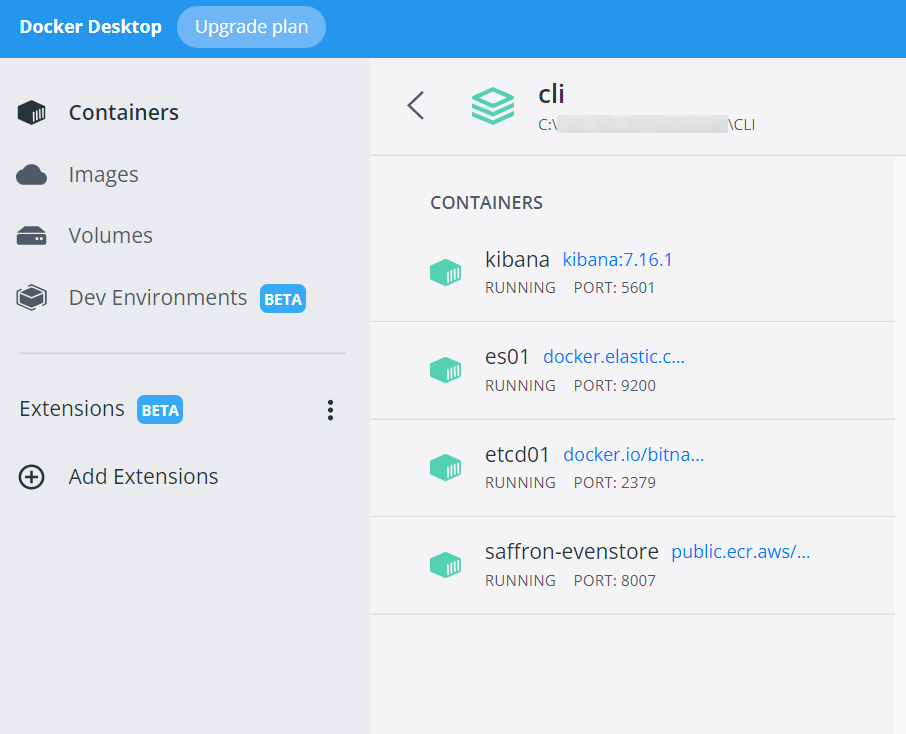

This file defines four containers:

- etcd for local storage

- Event store

- Elasticsearch for event store

- A Kibana web interface for Elasticsearch

Create Kibana Configuration File

Create a /local-runtime/kibana.yaml in the CLI workspace:

server.name: "kibana"

server.host: "0.0.0.0"

elasticsearch.hosts: [http://es01:9200]

xpack.security.enabled: true

elasticsearch.username: "elastic"

elasticsearch.password: "aa123456"

monitoring.enabled: false

Create Payload File

Create a /local-runtime/payload-local.yaml in the CLI workspace:

tasks:
  - dataProcessIdentityContext:
      permanentIdentity: 00000000-0000-0000-0000-000000000000
      revision: 1
      name: ""
    taskId:
      executionId: AAAAAAAAAAAAAAAAAAAAAA
      id: AAAAAAAAAAAAAAAAAAAAAA
    payload:
      http:
        apiGatewayIdentityContext:
          id: 00000000-0000-0000-0000-000000000000
          name: ""
        apiIdentityContext:
          id: 00000000-0000-0000-0000-000000000000
          name: ""
        requestId: "42"
        scheme: https
        version: HTTP/1.1
        host: localhost
        path: /hello
        method: POST
        headers:
          Content-Type: "application/json"
        query: "name=Arthur-Dent&age=42"
        body:
          name: "Arthur Dent"
          age: 42

This is similar to the payload file in Simple Data Process Execution, but the local runtime requires this version.

Start Local Service

Make sure your Docker is up and running, then run the following command from the CLI workspace:

docker-compose -f ./local-runtime/docker-compose.yaml up -d

Wait a moment so that the event store can initialize properly.
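Rather than guessing how long to wait, you could poll Elasticsearch, which the event store depends on. The sketch below is only an assumption-laden helper: it calls Elasticsearch's cluster health endpoint on the port published by the compose file (9200) and treats a "green" or "yellow" status as ready. It does not check the event store itself, and the function names and timings are illustrative.

```python
import http.client
import json
import time
import urllib.request


def cluster_status(base_url: str, timeout: float = 2.0):
    """Return the Elasticsearch cluster status string, or None if unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/_cluster/health", timeout=timeout) as resp:
            data = json.load(resp)
    except (OSError, ValueError, http.client.HTTPException):
        # Connection refused, HTTP error, timeout or non-JSON reply: not ready yet.
        return None
    return data.get("status") if isinstance(data, dict) else None


def wait_until_ready(base_url: str = "http://127.0.0.1:9200",
                     deadline_s: float = 120.0,
                     interval_s: float = 3.0,
                     probe=cluster_status) -> bool:
    """Poll until the cluster reports green/yellow; False if the deadline passes."""
    end = time.monotonic() + deadline_s
    while True:
        # A single-node cluster commonly reports "yellow", so accept it too.
        if probe(base_url) in ("green", "yellow"):
            return True
        if time.monotonic() >= end:
            return False
        time.sleep(interval_s)

# Example (needs the compose services running):
#   print("ready" if wait_until_ready() else "Elasticsearch did not come up in time")
```

Because the event store starts after Elasticsearch, it may still be sensible to pause briefly once this returns True.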

These services will automatically start every time you restart your machine.

You can stop the services with:

docker-compose -f ./local-runtime/docker-compose.yaml down

Execute a Data Process

For a JavaScript project:

./loc dp run-local <project name> -f ./local-runtime/payload-local.yaml --docker

For a TypeScript project:

./loc dp run-local -ts <project name> -f ./local-runtime/payload-local.yaml --docker

The CLI starts a new data process container, prints its logs and returns any data from the result agent.

For example, if we use the example from Quick Start, you will see a log in the output like this:

INFO saffron_process::task_finalizer::logging: Final result of DataProcess task [execution_id=AAAAAAAAAAAAAAAAAAAAAA,id=AAAAAAAAAAAAAAAAAAAAAA]:

{"status":"ok","taskId":{"executionId":"AAAAAAAAAAAAAAAAAAAAAA","id":"AAAAAAAAAAAAAAAAAAAAAA"},"response":{"message":"Hello, Arthur Dent!"}}
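If you capture the CLI output in a script, the final result can be pulled out of the log text. The following is a minimal sketch that assumes the result JSON sits on its own line, as in the sample above; the helper name parse_final_result is hypothetical and not part of the LOC CLI.

```python
import json


def parse_final_result(log_text: str):
    """Return the first JSON object line that has "status" and "taskId", else None."""
    for line in log_text.splitlines():
        line = line.strip()
        if not line.startswith("{"):
            continue  # ordinary log line
        try:
            obj = json.loads(line)
        except json.JSONDecodeError:
            continue  # looked like JSON but was not
        if isinstance(obj, dict) and "status" in obj and "taskId" in obj:
            return obj
    return None


# The two log lines shown above, used as sample input.
sample = (
    "INFO saffron_process::task_finalizer::logging: Final result of DataProcess task "
    "[execution_id=AAAAAAAAAAAAAAAAAAAAAA,id=AAAAAAAAAAAAAAAAAAAAAA]:\n"
    '{"status":"ok","taskId":{"executionId":"AAAAAAAAAAAAAAAAAAAAAA",'
    '"id":"AAAAAAAAAAAAAAAAAAAAAA"},"response":{"message":"Hello, Arthur Dent!"}}\n'
)

result = parse_final_result(sample)
print(result["response"]["message"])  # Hello, Arthur Dent!
```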

Purge Runtime Containers

Since every local data process execution creates a new container, you can remove the leftover containers with this command:

docker rm $(docker ps -a -q --filter ancestor=public.ecr.aws/m0s8j1u6/saffron/simple-runtime:release-0.5.1)

This command does not work in the Windows Command Prompt; use PowerShell instead. Alternatively, delete all inactive containers manually in Docker Desktop.
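A shell-independent alternative is to run the same two Docker commands from a small script. The sketch below assumes the docker CLI is on your PATH and mirrors the command above (list containers by ancestor image, then docker rm them); the function names are illustrative. Like the original command, it does not force-remove running containers.

```python
import subprocess

IMAGE = "public.ecr.aws/m0s8j1u6/saffron/simple-runtime:release-0.5.1"


def list_command(image: str) -> list:
    """Build the command that lists IDs of all containers created from the image."""
    return ["docker", "ps", "-a", "-q", "--filter", f"ancestor={image}"]


def purge(image: str = IMAGE, run=subprocess.run) -> list:
    """Remove containers created from the image and return their IDs."""
    listed = run(list_command(image), capture_output=True, text=True, check=True)
    ids = listed.stdout.split()
    if ids:
        # No -f flag: a running container makes docker rm fail, as in the shell version.
        run(["docker", "rm", *ids], check=True)
    return ids

# Example (requires Docker):
#   removed = purge()
#   print(f"removed {len(removed)} container(s)")
```

The run parameter exists only so the function can be exercised without Docker; in normal use leave it at its default.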

Accessing Localhost Services

HTTP, database and file storage agents can all access services on your localhost via

host.docker.internal:<port>

For example, ftp://user:pass@host.docker.internal:21/path/filename.
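If your existing configuration points at localhost, a helper like the one below can rewrite such URLs before they are handed to an agent. This is only a sketch: to_container_url is a hypothetical name, and it assumes that loopback hosts (localhost, 127.0.0.1, ::1) are the only ones that need the host.docker.internal alias.

```python
from urllib.parse import urlsplit, urlunsplit

LOOPBACK_HOSTS = {"localhost", "127.0.0.1", "::1"}
DOCKER_HOST_ALIAS = "host.docker.internal"


def to_container_url(url: str) -> str:
    """Replace a loopback host with host.docker.internal, keeping credentials and port."""
    parts = urlsplit(url)
    if parts.hostname not in LOOPBACK_HOSTS:
        return url  # not a localhost URL, leave untouched

    userinfo = ""
    if parts.username is not None:
        userinfo = parts.username
        if parts.password is not None:
            userinfo += ":" + parts.password
        userinfo += "@"
    port = f":{parts.port}" if parts.port is not None else ""

    return urlunsplit(parts._replace(netloc=f"{userinfo}{DOCKER_HOST_ALIAS}{port}"))


print(to_container_url("ftp://user:pass@localhost:21/path/filename"))
# ftp://user:pass@host.docker.internal:21/path/filename
```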

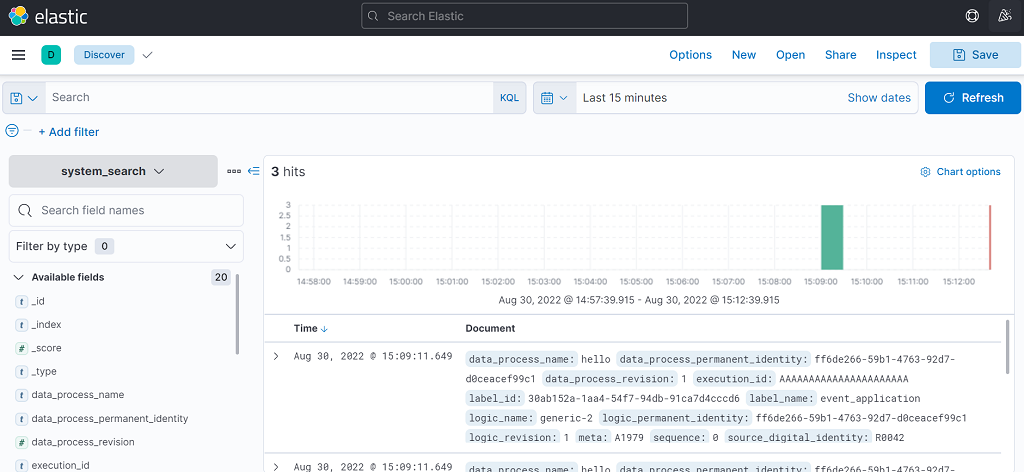

Accessing Local Event Logs

The local runtime comes with a Kibana service, just like the real LOC Core environment, which lets you view event logs emitted by the event agent without using data processes.

- Open http://127.0.0.1:5601 in your browser. (It will be ready only after the event store is up and running.)

- In the left-side menu, go to Analytics -> Discover.

- If this is the first time you have used the local Kibana on your machine, click Create index pattern.

- Enter system_search in the search box and select timestamp in the Timestamp field.

- Click Create index pattern again.

- Go back to Discover. You should now be able to see event logs (if there are any).

Below is an example of viewing events in Kibana:

The default display time range is the last 15 minutes.
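The same events can also be read without the Kibana UI by querying the local Elasticsearch container directly. The sketch below makes several assumptions: that system_search (the name entered as the index pattern above) can be queried as an index, that timestamp is its date field, and that Elasticsearch is reachable on the published port 9200. The function names are illustrative.

```python
import json
import urllib.request

ES_URL = "http://127.0.0.1:9200"
INDEX = "system_search"  # assumed to match the Kibana index pattern above


def recent_events_query(minutes: int = 15, size: int = 10) -> dict:
    """Build a search body for the newest events within the last N minutes."""
    return {
        "size": size,
        "sort": [{"timestamp": {"order": "desc"}}],
        "query": {"range": {"timestamp": {"gte": f"now-{minutes}m"}}},
    }


def fetch_recent_events(minutes: int = 15, size: int = 10,
                        base_url: str = ES_URL, index: str = INDEX) -> list:
    """POST the query to Elasticsearch's _search endpoint and return the event documents."""
    body = json.dumps(recent_events_query(minutes, size)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/{index}/_search",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as resp:
        hits = json.load(resp)["hits"]["hits"]
    return [hit["_source"] for hit in hits]

# Example (needs the compose services running):
#   for event in fetch_recent_events(minutes=60):
#       print(event)
```

The default of 15 minutes matches Kibana's default range; pass a larger minutes value to look further back.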